Table of Contents

- Manage Multiple Kubernetes Clusters with Argocd

- Assumptions

- IAM Shenanigans

- Get IP & Certificate Authority of the Remote K8s Clusters

- Create Argocd Helm Chart Values File

- Run Helm Install/Upgrade

- Confirm everything is working

- Troubleshooting

Manage Multiple Kubernetes Clusters with Argocd

Argocd makes it easy to manage multiple Kubernetes clusters from a single instance of Argocd. Let's get to it.

Assumptions

- You already have a remote cluster you want to manage.

- You are using GKE.
  - If not, this guide can still help you. Just make sure the argocd-server and argocd-application-controller service accounts have admin permissions to the remote cluster.

- You are using helm to manage argocd.
  - If not, then dang, that must be rough.

- You have the ability to create service accounts with container admin permissions.
  - Or the argocd-server and argocd-application-controller service accounts already have admin permissions to the remote cluster.

IAM Shenanigans

We need to:

- Create a Google service account (GSA) with the container.admin role.

- Bind the iam.workloadIdentityUser role to the Kubernetes service accounts (KSAs) argocd-server and argocd-application-controller so that they can impersonate that GSA. This relies on Workload Identity being enabled on the GKE cluster that runs Argocd.

Here’s a simple script to do just that. Call it create-gsa.sh.

```bash
#!/usr/bin/env bash
# create-gsa.sh: create a GSA for Argocd and let the Argocd KSAs impersonate it
PROJECT_ID=$(gcloud projects list --filter="$(gcloud config get-value project)" --format="value(PROJECT_ID)")
SERVICE_ACCOUNT_NAME=argo-cd-01
PROJECT_NUMBER=$(gcloud projects list --filter="$(gcloud config get-value project)" --format="value(PROJECT_NUMBER)")
GSA_EMAIL="$SERVICE_ACCOUNT_NAME@$PROJECT_ID.iam.gserviceaccount.com"

gcloud iam service-accounts create "$SERVICE_ACCOUNT_NAME" \
  --description="Argocd multi-cluster access" \
  --display-name="argo-cd-01"
echo "Created Google service account (GSA) $GSA_EMAIL"

sleep 5 # The IAM policy binding sometimes fails if it runs too soon after service account creation

gcloud projects add-iam-policy-binding "$PROJECT_ID" \
  --role roles/container.admin \
  --member "serviceAccount:$GSA_EMAIL"
echo "Added role container.admin to GSA $GSA_EMAIL"

# Needed so each KSA can impersonate the GSA account
for KSA in argocd-server argocd-application-controller; do
  gcloud iam service-accounts add-iam-policy-binding "$GSA_EMAIL" \
    --role roles/iam.workloadIdentityUser \
    --member "serviceAccount:$PROJECT_ID.svc.id.goog[argocd/$KSA]"
  echo "Added IAM policy for KSA serviceAccount:$PROJECT_ID.svc.id.goog[argocd/$KSA]"
done
```
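To double-check the bindings, a small sketch like the one below can read the GSA's IAM policy back and look for each Workload Identity member. It assumes the argo-cd-01 GSA and the argocd namespace used above. The helper names (wi_member, has_binding, check_wi_bindings) are hypothetical, and gcloud must already be authenticated.

```bash
#!/usr/bin/env bash
# Sketch: verify the Workload Identity bindings created by create-gsa.sh.
# Helper names are hypothetical; gcloud must be authenticated.

# Build the Workload Identity member string for a Kubernetes service account.
wi_member() {
  local project_id="$1" namespace="$2" ksa="$3"
  printf 'serviceAccount:%s.svc.id.goog[%s/%s]\n' "$project_id" "$namespace" "$ksa"
}

# Read "role<TAB>member" lines on stdin; succeed if the exact pair is present.
has_binding() {
  local role="$1" member="$2"
  grep -qxF "$(printf '%s\t%s' "$role" "$member")"
}

# Check that both Argocd KSAs are allowed to impersonate the GSA.
check_wi_bindings() {
  local project_id="$1" gsa_email="$2" policy ksa member status=0
  # One line per (role, member) pair, tab-separated.
  policy=$(gcloud iam service-accounts get-iam-policy "$gsa_email" \
    --flatten="bindings[].members" \
    --format="value(bindings.role,bindings.members)")
  for ksa in argocd-server argocd-application-controller; do
    member=$(wi_member "$project_id" argocd "$ksa")
    if has_binding roles/iam.workloadIdentityUser "$member" <<< "$policy"; then
      echo "OK: $member"
    else
      echo "MISSING: $member" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage: check_wi_bindings "$PROJECT_ID" "argo-cd-01@${PROJECT_ID}.iam.gserviceaccount.com"
```

Flattening the policy into one role/member pair per line keeps the check to a plain exact-line grep, so there is no JSON parser to install.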

Get IP & Certificate Authority of the Remote K8s Clusters

Get Public IP and Unencoded Cluster Certificate

In the Google Cloud console:

- Go to the remote cluster's details page.

- Under the Control Plane Networking section, note the public endpoint and find the “Show cluster certificate” button.

- Press the “Show cluster certificate” button to get the certificate.
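If you prefer the CLI, gcloud can return the same two values. The sketch below assumes a regional cluster (pass --zone instead of --region for a zonal one), and the function names and cluster name are placeholders. The GKE API reports masterAuth.clusterCaCertificate already base64-encoded, so that value can go straight into CLUSTER_CERT_BASE64_ENCODED without the manual encoding step.

```bash
#!/usr/bin/env bash
# Sketch: fetch the remote cluster's endpoint and CA with gcloud.
# Function names are hypothetical; use --zone instead of --region for zonal clusters.

# Public endpoint: a bare IP address with no "https://" prefix.
cluster_endpoint() {
  local cluster="$1" region="$2"
  gcloud container clusters describe "$cluster" --region "$region" \
    --format="value(endpoint)"
}

# Cluster CA certificate, already base64-encoded by the GKE API.
cluster_ca_base64() {
  local cluster="$1" region="$2"
  gcloud container clusters describe "$cluster" --region "$region" \
    --format="value(masterAuth.clusterCaCertificate)"
}

# Usage (placeholder names):
#   CLUSTER_IP=$(cluster_endpoint my-remote-cluster us-central1)
#   CLUSTER_CERT_BASE64_ENCODED=$(cluster_ca_base64 my-remote-cluster us-central1)
```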

Base64 Encode Cluster Certificate

- Copy the certificate to a file called cc.txt.
  - Be sure to copy everything, including the BEGIN/END CERTIFICATE lines.

- Run the base64 command to encode the certificate:

```bash
# -w 0 (GNU coreutils) keeps the output on one line; echo adds a trailing newline
base64 -w 0 cc.txt && echo ""
```

Create Argocd Helm Chart Values File
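The -w 0 flag in the base64 command is specific to GNU coreutils, and the macOS base64 does not accept it. The portable sketch below checks that the BEGIN/END lines made it into cc.txt before encoding and strips any line wrapping with tr. encode_cert is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: check that a file holds a complete PEM certificate, then
# base64-encode it on a single line without the GNU-only -w flag.
encode_cert() {
  local file="$1"
  if ! grep -q -- '-----BEGIN CERTIFICATE-----' "$file" ||
     ! grep -q -- '-----END CERTIFICATE-----' "$file"; then
    echo "error: $file is missing the BEGIN/END CERTIFICATE lines" >&2
    return 1
  fi
  # GNU base64 wraps at 76 columns by default; remove every newline.
  base64 < "$file" | tr -d '\n'
}

# Usage: CLUSTER_CERT_BASE64_ENCODED=$(encode_cert cc.txt)
```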

Add the base64-encoded cluster certificate and the public IP to CLUSTER_CERT_BASE64_ENCODED and CLUSTER_IP, respectively.

Create a bash script called create-yaml.sh and execute it. It writes the Helm values to values.yaml.

PROJECT_ID=$(gcloud projects list --filter="$(gcloud config get-value project)" --format="value(PROJECT_ID)")

SERVICE_ACCOUNT_NAME=argo-cd-01

PROJECT_NUMBER=$(gcloud projects list --filter="$(gcloud config get-value project)" --format="value(PROJECT_NUMBER)")

CLUSTER_CERT_BASE64_ENCODED=""

CLUSTER_IP="" # Example 35.44.34.111. DO NOT INCLUDE "https://"

cat > values.yaml <<EOL

configs:

clusterCredentials:

remote-cluster:

server: https://${CLUSTER_IP}

config:

{

"execProviderConfig": {

"command": "argocd-k8s-auth",

"args": [ "gcp" ],

"apiVersion": "client.authentication.k8s.io/v1beta1"

},

"tlsClientConfig": {

"insecure": false,

"caData": "${CLUSTER_CERT_BASE64_ENCODED}"

}

}

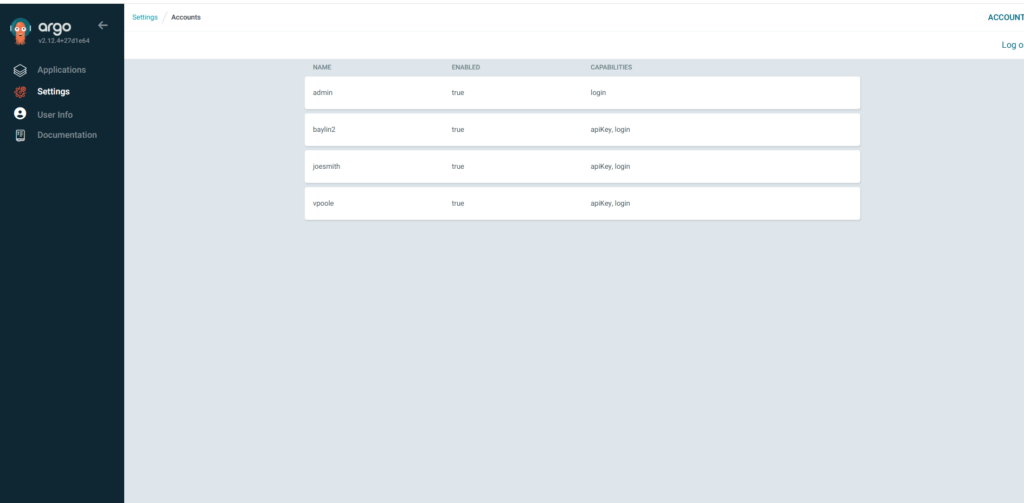

rbac:

##################################

# Assign admin roles to users

##################################

policy.default: role:readonly # ***** Allows you to view everything without logging in.

policy.csv: |

g, myAdmin, role:admin

##################################

# Assign permission login and to create api keys for users

##################################

cm:

accounts.myAdmin: apiKey, login

users.anonymous.enabled: true

params:

server.insecure: true #communication between services is via http

##################################

# Assigning the password to the users. Argo-cd uses bycypt.

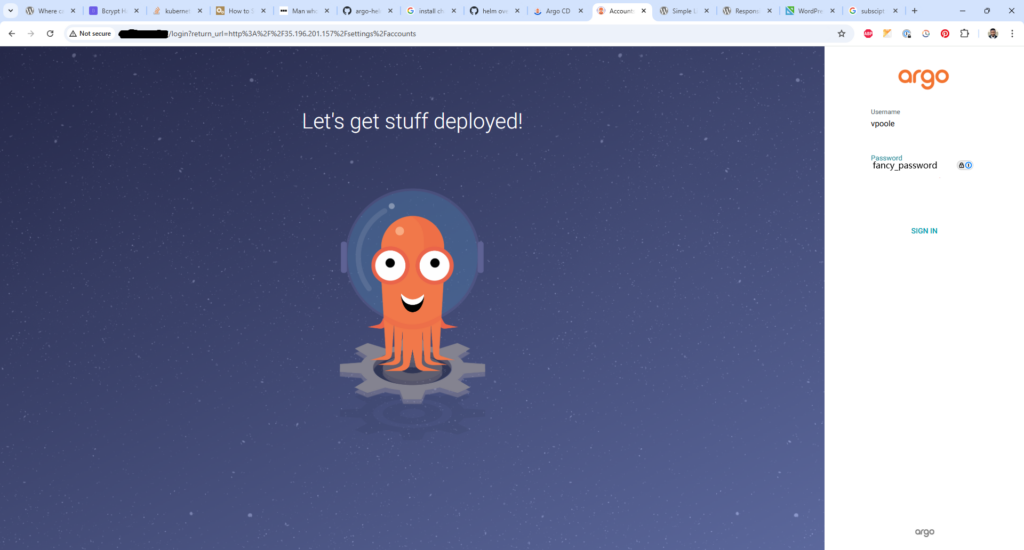

# To generate a new password use https://bcrypt.online/ to generate a new password and add it here.

##################################

secret:

extra:

accounts.myAdmin.password: \$2y\$10\$p5knGMvbVSSBzvbeM1tLne2rYBW.4L6aJqN.Fp1AalKe3qh3LuBq6 #fancy_password

accounts.myAdmin.passwordMtime: 1970-10-08T17:45:10Z

controller:

serviceAccount:

annotations:

iam.gke.io/gcp-service-account: ${SERVICE_ACCOUNT_NAME}@${PROJECT_ID}.iam.gserviceaccount.com

server:

serviceAccount:

annotations:

iam.gke.io/gcp-service-account: ${SERVICE_ACCOUNT_NAME}@${PROJECT_ID}.iam.gserviceaccount.com

service:

type: LoadBalancer

EOLRun Helm Install/Upgrade
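Before running helm, it can be worth sanity-checking the two values pasted into create-yaml.sh. The same sketch also shows how to generate the bcrypt hash locally instead of through a website. The function names are hypothetical. bcrypt_hash assumes htpasswd from apache2-utils (or httpd-tools) is installed, and check_cluster_inputs uses base64 -d as in GNU coreutils. Because create-yaml.sh writes values.yaml through an unquoted heredoc, every `$` in the hash has to be escaped, which is what heredoc_escape does.

```bash
#!/usr/bin/env bash
# Sketch: pre-flight checks for create-yaml.sh plus a local bcrypt helper.
# Function names are hypothetical; htpasswd must be installed for bcrypt_hash.

# Fail if CLUSTER_IP is empty or has a scheme, or if the CA does not decode to a PEM cert.
check_cluster_inputs() {
  local ip="$1" ca="$2"
  case "$ip" in
    "") echo "error: CLUSTER_IP is empty" >&2; return 1 ;;
    http://*|https://*) echo "error: CLUSTER_IP must not include the scheme" >&2; return 1 ;;
  esac
  if [ -z "$ca" ]; then
    echo "error: CLUSTER_CERT_BASE64_ENCODED is empty" >&2
    return 1
  fi
  if ! printf '%s' "$ca" | base64 -d 2>/dev/null | grep -q -- '-----BEGIN CERTIFICATE-----'; then
    echo "error: CLUSTER_CERT_BASE64_ENCODED does not decode to a PEM certificate" >&2
    return 1
  fi
}

# bcrypt hash (cost 10) of a password; htpasswd prints ":<hash>" for an empty user name.
bcrypt_hash() {
  htpasswd -nbBC 10 "" "$1" | tr -d ':\n'
}

# Escape "$" so the hash survives the unquoted <<EOL heredoc in create-yaml.sh.
heredoc_escape() {
  printf '%s' "$1" | sed 's/\$/\\$/g'
}
```

For example, call `check_cluster_inputs "$CLUSTER_IP" "$CLUSTER_CERT_BASE64_ENCODED"` before the `cat > values.yaml` line, and paste the output of `heredoc_escape "$(bcrypt_hash 'fancy_password')"` into accounts.myAdmin.password.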

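Install the chart into the argocd namespace, because the Workload Identity bindings in create-gsa.sh reference argocd/argocd-server and argocd/argocd-application-controller:

```bash
helm install --repo https://argoproj.github.io/argo-helm --version 7.6.7 argocd argo-cd \
  --namespace argocd --create-namespace -f values.yaml
```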

If you run helm upgrade, make sure you delete the argocd-server and argocd-application-controller pods so that the service account changes take effect.
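Instead of deleting the pods by hand, a rollout restart recreates them in the same way. The sketch below assumes the release name argocd in the argocd namespace. With those names, chart 7.x by default creates argocd-server as a Deployment and argocd-application-controller as a StatefulSet. The sketch then lists the cluster secrets that the chart generates from configs.clusterCredentials. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: restart Argocd so KSA annotation changes take effect, then list
# the cluster secrets Argocd knows about. Assumes release/namespace "argocd".
NAMESPACE=argocd

restart_argocd() {
  kubectl -n "$NAMESPACE" rollout restart deployment/argocd-server
  kubectl -n "$NAMESPACE" rollout restart statefulset/argocd-application-controller
  # Block until the new pods are ready (or the timeout expires).
  kubectl -n "$NAMESPACE" rollout status deployment/argocd-server --timeout=180s
  kubectl -n "$NAMESPACE" rollout status statefulset/argocd-application-controller --timeout=180s
}

# Print the names of secrets labeled as Argocd cluster credentials.
list_cluster_secrets() {
  kubectl -n "$NAMESPACE" get secrets \
    -l argocd.argoproj.io/secret-type=cluster \
    -o jsonpath='{.items[*].metadata.name}'
}

# Usage: restart_argocd && list_cluster_secrets
```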

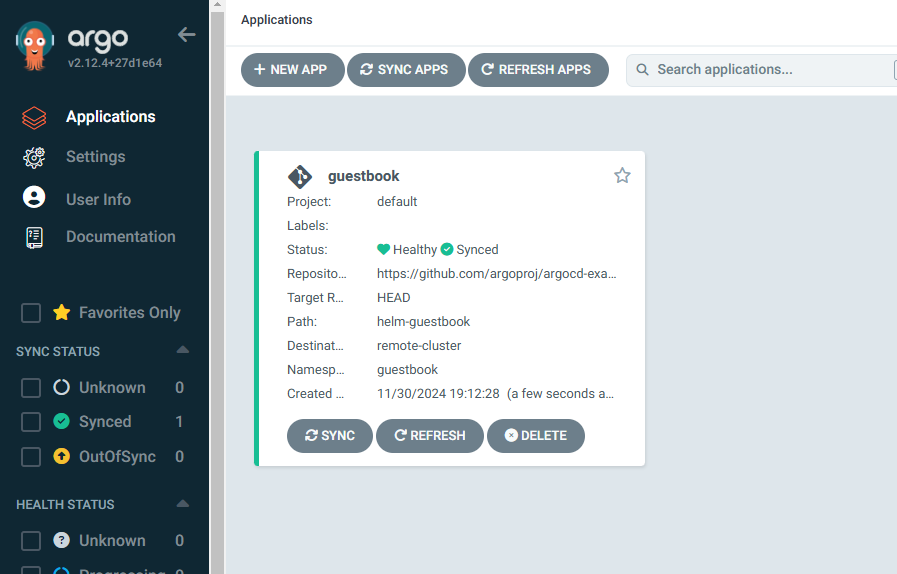

Confirm everything is working

You can create your own application on the remote cluster or run this script to create one. Create a bash script called apply-application.sh and execute it.

```bash
#!/usr/bin/env bash
# Run against the cluster where Argocd is installed (not the remote cluster)
YAML_FILE_NAME="guestbook-application.yaml"

cat > "$YAML_FILE_NAME" << EOL
---
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: guestbook
  namespace: argocd
spec:
  destination:
    namespace: guestbook
    name: remote-cluster # Name of the remote cluster, as defined in values.yaml
  project: default
  source:
    path: helm-guestbook
    repoURL: https://github.com/argoproj/argocd-example-apps # Check to make sure this still exists
    targetRevision: HEAD
  syncPolicy:
    automated:
      selfHeal: true
    syncOptions:
      - CreateNamespace=true
EOL

kubectl apply -f "$YAML_FILE_NAME"
```

After a short while, the Application should be automatically synced and healthy.
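To confirm this from the terminal instead of the UI, you can poll the Application's sync and health status. The sketch below reads .status.sync.status and .status.health.status from the Application resource. The function name and the default retry count and delay are hypothetical choices.

```bash
#!/usr/bin/env bash
# Sketch: poll an Argocd Application until it reports Synced and Healthy.
# Function name and retry defaults are hypothetical.
wait_for_app() {
  local app="$1" namespace="${2:-argocd}" attempts="${3:-30}" delay="${4:-10}" status i
  for ((i = 1; i <= attempts; i++)); do
    status=$(kubectl -n "$namespace" get applications.argoproj.io "$app" \
      -o jsonpath='{.status.sync.status} {.status.health.status}')
    echo "attempt $i: ${status:-<no status yet>}"
    if [ "$status" = "Synced Healthy" ]; then
      return 0
    fi
    sleep "$delay"
  done
  echo "error: $app did not become Synced/Healthy" >&2
  return 1
}

# Usage: wait_for_app guestbook
```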

Troubleshooting

- If you did a helm upgrade instead of a helm install, you may need to delete the argocd-server and argocd-application-controller pods so that the service account changes take effect.
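If the remote cluster still fails to connect, check that both KSAs carry the iam.gke.io/gcp-service-account annotation and that it points at the GSA created earlier. A missing or mistyped annotation stops the pods from impersonating the GSA. The sketch below compares the annotation on each KSA with an expected GSA email. The function name is hypothetical, and the namespace defaults to argocd.

```bash
#!/usr/bin/env bash
# Sketch: confirm each Argocd KSA has the Workload Identity annotation
# pointing at the expected GSA. Function name is hypothetical.
check_ksa_annotations() {
  local expected_gsa="$1" namespace="${2:-argocd}" ksa actual status=0
  for ksa in argocd-server argocd-application-controller; do
    # Dots inside the annotation key must be escaped in kubectl jsonpath.
    actual=$(kubectl -n "$namespace" get serviceaccount "$ksa" \
      -o jsonpath='{.metadata.annotations.iam\.gke\.io/gcp-service-account}')
    if [ "$actual" = "$expected_gsa" ]; then
      echo "OK: $ksa -> $actual"
    else
      echo "MISMATCH: $ksa -> '${actual}' (expected $expected_gsa)" >&2
      status=1
    fi
  done
  return "$status"
}

# Usage: check_ksa_annotations "argo-cd-01@${PROJECT_ID}.iam.gserviceaccount.com"
```

If you change the annotation, restart the pods again so that they pick up the new identity.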